The Internet as we know it today was released to the public somewhere in the mid-1990s, and so was I, as a human being. I've been online since I was about 10 years old, growing up alongside this rapidly evolving technology. As a teenager, I had a blast exploring the Internet, playing games, and connecting with people. Later, in my adult life, I specialized in SEO, which quickly became my primary source of income.

Knowing SEO and marketing, I always understood that the Internet was full of nonsense and shady practices driven by the relentless pursuit of profit, especially on social media. Nevertheless, for a solid two decades, it truly was a space where people could share, connect, and even tie their online activity to the real world.

Today, however, I'm convinced that the Internet is almost dead, or at least its human side is. I can demonstrate this in countless ways, from personal experience to technological nuances, but let's start by structuring the topic.

Dead Internet Theory is somewhat of a conspiracy theory when taken to extremes. But at its core, it argues that much of the Internet is no longer driven by authentic human activity. Instead, it's filled with AI-generated content, bots, and automated scripts. In such a space, major platforms are flooded with fake users, manufactured discourse, and artificial engagement, creating an illusion of human interaction. The conspiracy part adds that corporations and their algorithms already control most online activity, for profit and power, of course.

Well, automation is finally here. I emphasize "finally" because, ever since my early days in internet marketing, there have been discussions about automating tasks like SEO link-building or social media activity to boost engagement and gain followers. However, those methods were usually ineffective and eventually penalized, proving unprofitable in the long run.

Now, with AI becoming widely accepted, we can push the boundaries as far as our imaginations allow. I won't even bother addressing the basic spam flooding our DMs or comment sections on social media, or the countless auto-generated articles saturating the web. The Internet is already overflowing with AI-driven nonsense, actively propelled by humans seeking quick gains.

The real problem, and the point where Dead Internet Theory escalates, comes when AI starts to mimic authentic human interactions. For example, just recently, I was watching financial news on YouTube and spotted a suspicious comment thread:

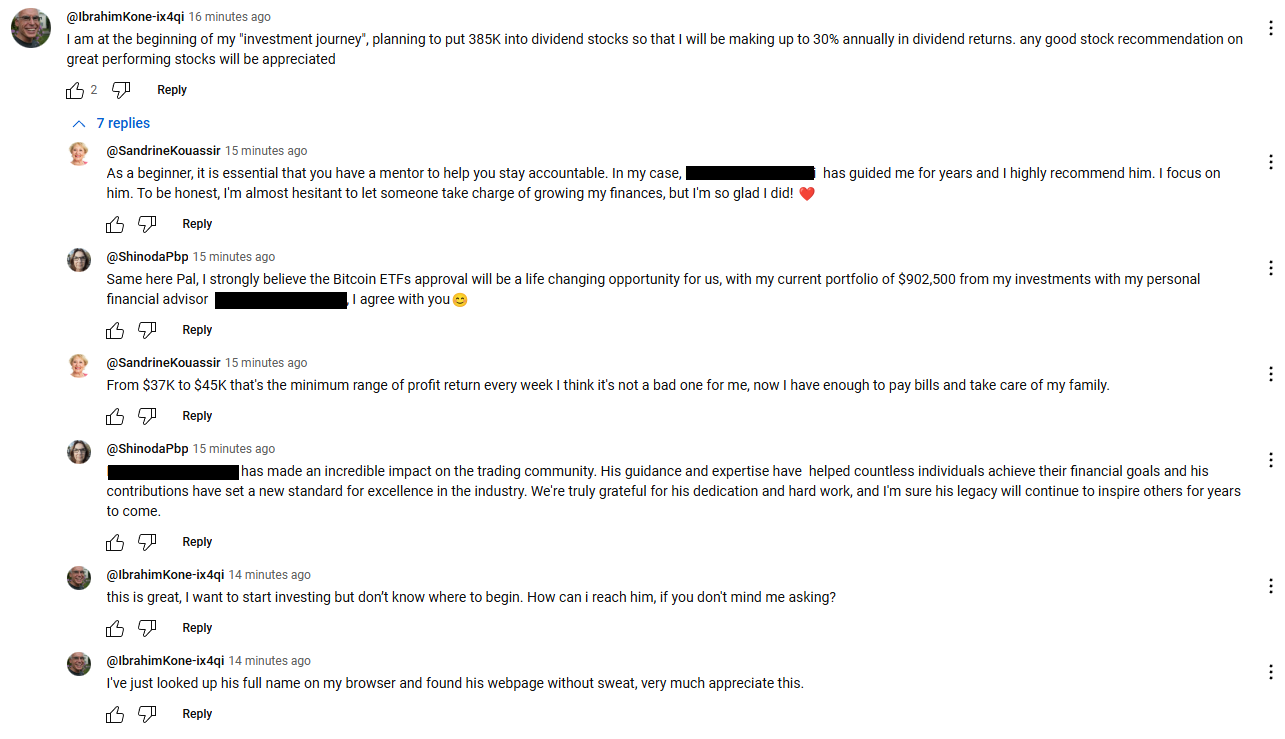

At first glance, you see three "people" exchanging helpful advice, navigating life's challenges by recommending a certain financial expert (whose name I've blurred)—sweet, right? But on closer inspection, several red flags pop up.

How could this entire detailed exchange take place almost instantly after the first comment, wrapping up in under two minutes? Is it realistic for humans to react and produce coherent replies that quickly? Additionally, isn't it odd that these "individuals" passionately recommend the same obscure expert? And finally, don't you notice the clearly structured narrative moving smoothly from problem to solution, backed by social proof? Oh, of course, there were no new comments in this thread for several days—2 minutes of sparks, then silence. That's odd.

The timing gives it away—but that's precisely the trick. After just one hour, YouTube stops showing the exact time a comment was posted, displaying only a vague "1 hour/day/week/month ago." Without external tools, there's no way to confirm exact timestamps. Thus, within just an hour, these bots have successfully mimicked genuine human interactions, cleverly promoting questionable financial advice.
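The red flags above can be turned into a rough checklist. Here is a minimal Python sketch, assuming you have somehow obtained exact timestamps for a thread (which, as noted, the YouTube page itself stops showing after an hour). The `Comment` class, the `thread_red_flags` function, the two-minute threshold, and the sample data (including the name "Jane Doe") are all hypothetical, made up for illustration. It is a heuristic, not a real bot detector.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Comment:
    author: str
    posted_at: datetime  # exact timestamp, assumed to come from an external tool
    text: str


def thread_red_flags(comments, names_to_check, max_span=timedelta(minutes=2)):
    """Return a list of human-readable red flags for one comment thread."""
    flags = []
    if len(comments) < 2:
        return flags

    # Red flag 1: the whole exchange wraps up suspiciously fast.
    times = sorted(c.posted_at for c in comments)
    span = times[-1] - times[0]
    if span <= max_span:
        flags.append(f"whole thread posted within {int(span.total_seconds())} seconds")

    # Red flag 2: several different accounts push the same name.
    for name in names_to_check:
        authors = {c.author for c in comments if name.lower() in c.text.lower()}
        if len(authors) >= 2:
            flags.append(f"{len(authors)} accounts recommend '{name}'")
    return flags


# Hypothetical thread shaped like the one in the screenshot.
start = datetime(2025, 3, 10, 14, 0, 0)
thread = [
    Comment("user_a", start, "I keep losing money in this market. Any advice?"),
    Comment("user_b", start + timedelta(seconds=45), "Jane Doe turned my portfolio around."),
    Comment("user_c", start + timedelta(seconds=100), "Same here, Jane Doe is the real deal!"),
]
print(thread_red_flags(thread, ["Jane Doe"]))
```

Neither signal proves anything on its own. Real people do sometimes reply quickly or recommend the same person. It's the combination, plus the silence afterwards, that makes a thread worth a second look.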

Of course, this isn't an isolated incident. Similar comment threads pop up regularly under popular videos in finance and other categories, each promoting something, whether products, individuals, or even political propaganda. It's becoming increasingly sophisticated and easier to orchestrate using AI. Yet this remains just the tip of the iceberg, given recent AI breakthroughs.

People are already unknowingly engaging with purely AI-generated content on social media, and major platforms welcome this phenomenon because it significantly boosts engagement, which translates directly into ad revenue. In China, AI-generated deepfake influencers stream 24/7, driving both engagement and e-commerce sales. It's already happening and accelerating rapidly, but the final blow to the human Internet has yet to come.

Recently, the Chinese startup Monica introduced Manus, an autonomous AI agent launched on March 6, 2025. What's groundbreaking about Manus? It's explicitly designed to independently execute complex real-world tasks without direct or continuous human guidance. Read that last part again.

In the previous example, three bots with convincing profile images staged a seemingly genuine conversation. It was a neat trick, sure, but AI agents like Manus can now:

- Create profiles (including writing bios and uploading profile photos)

- Post diverse content (text, images, videos, and even memes)

- Like, comment, and follow/unfollow other users

- Engage effectively with trending topics and hashtags

- Analyze audience engagement to optimize posting schedules

All this without "direct or continuous human guidance."

Imagine that you're an egocentric guy promoting a profile to sell a shady eBook. Now you won't need to pay for engagement groups or buy low-quality followers anymore. Instead, you'll "hire" a small army of AI agents that automatically like your nonsense, post glowing comments, and reshare your content to relevant communities. My point is that I'm certain people will misuse these powerful AI agents in their endless pursuit of money, influence, and power.

Ultimately, this could mark the end of the Internet as we know it. Humans, as creative as we may be, simply can't compete with the sheer scale and speed of AI. Anyone who has used a powerful AI model with good prompts knows it feels like magic—just seconds, and voilà.

For now, AI-generated content and characters still compete for human attention. But as AI agents become more mainstream, the human-centric Internet will fade into obscurity. I'm describing a scenario, a vicious cycle, where most active profiles become AI agents generating and interacting with content produced by other AI agents, pushing real people away.

In this way, the Internet might become busier and more active than ever, yet devoid of genuine human participation. That's Dead Internet Theory in action. From active participants and creators, we risk becoming mere passive observers and consumers. Maybe that's the plan, maybe it's just foolishness—but one thing is clear: we're rapidly heading toward it.

However, despite these concerns, it's important to remember that humans have always adapted to technological shifts, often discovering new ways to foster genuine connections and creativity. Perhaps this wave of AI will inspire us to redefine our digital presence, prioritizing meaningful, authentic interactions more intentionally than ever before. After all, the Internet's future is still unwritten—and it's ultimately in our hands to shape it positively.